Abstract

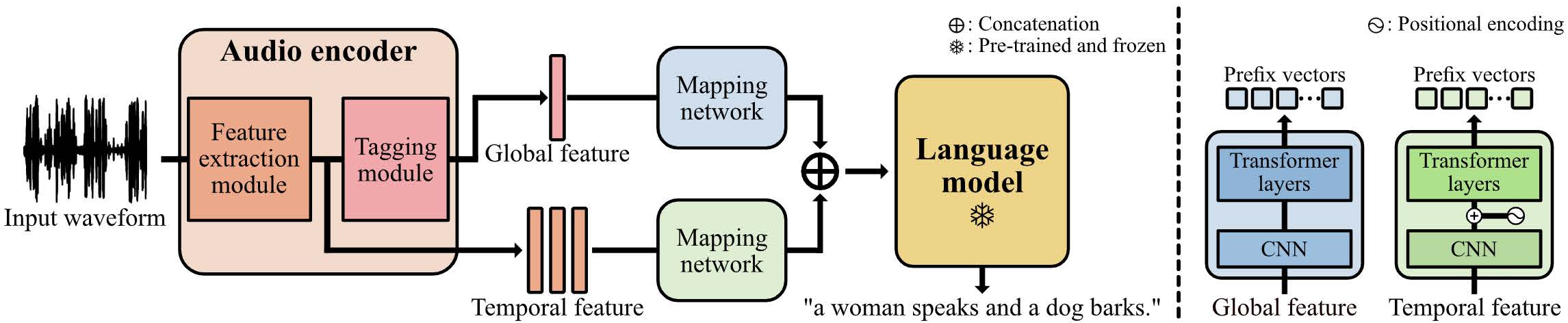

Audio captioning aims to generate text descriptions of environmental sounds. One challenge in audio captioning is poor generalization caused by the scarcity of paired audio-text training data. In this work, we propose a simple yet effective method for dealing with small-scale datasets by leveraging a pre-trained language model. We keep the language model frozen to preserve its expressivity for text generation, and learn only to extract global and temporal features from the input audio. To bridge the modality gap between the audio features and the language model, we employ mapping networks that translate audio features into continuous vectors that the language model can understand, called prefixes. We evaluate the proposed method on the Clotho and AudioCaps datasets and show that it outperforms prior art in diverse experimental settings.
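The prefix idea described in the abstract can be illustrated with a toy sketch. This is not the paper's implementation. Every size, name, and helper below is hypothetical, each mapping network is assumed to be a single linear layer, and the order in which the two prefixes are concatenated is arbitrary. Random vectors stand in for the audio encoder outputs and for the frozen language model's token embeddings. The sketch only shows how mapped audio features are placed in front of the caption embeddings.

```python
import random

rng = random.Random(0)

# Toy sizes, all hypothetical.
AUDIO_DIM = 8          # size of each audio feature vector
LM_DIM = 4             # embedding size of the language model
GLOBAL_PREFIX_LEN = 2  # prefix vectors produced from the global feature


def make_linear(in_dim, out_dim):
    """Random weight matrix with out_dim rows and in_dim columns."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
            for _ in range(out_dim)]


def linear(weights, x):
    """Matrix-vector product: one output value per weight row."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def map_global(weights, feature):
    """Map one global feature to GLOBAL_PREFIX_LEN prefix vectors."""
    flat = linear(weights, feature)
    return [flat[i * LM_DIM:(i + 1) * LM_DIM]
            for i in range(GLOBAL_PREFIX_LEN)]


def map_temporal(weights, features):
    """Map each time-step feature to one prefix vector."""
    return [linear(weights, f) for f in features]


# Trainable parts (assumed here to be single linear layers).
global_mapper = make_linear(AUDIO_DIM, GLOBAL_PREFIX_LEN * LM_DIM)
temporal_mapper = make_linear(AUDIO_DIM, LM_DIM)

# Frozen part: stand-in for the language model's token embedding table.
caption = ["ocean", "waves", "crash"]
frozen_embeddings = {tok: [rng.uniform(-1, 1) for _ in range(LM_DIM)]
                     for tok in caption}

# Stand-ins for the audio encoder outputs.
global_feature = [rng.random() for _ in range(AUDIO_DIM)]
temporal_features = [[rng.random() for _ in range(AUDIO_DIM)]
                     for _ in range(3)]

# The prefix is placed in front of the caption token embeddings.
prefix = (map_temporal(temporal_mapper, temporal_features)
          + map_global(global_mapper, global_feature))
lm_input = prefix + [frozen_embeddings[tok] for tok in caption]

print(len(prefix), len(lm_input), len(lm_input[0]))
```

In a real training setup only the mapping networks (and the audio feature extractor) would receive gradient updates, while the embedding table and the rest of the language model stay fixed.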

Qualitative results

kXjzsroVTtw.wav

"a man speaks as birds chirp in the background"

Lluvia_1.wav

"a heavy rain is falling down and thunder rumbles"

JHhEjsAkZoc.wav

"a train horn blows as it passes by"

Atlantic Ocean Waves.wav

"ocean waves crash against the shore"

BibTeX

@inproceedings{kim2023prefix,
  title={Prefix tuning for automated audio captioning},
  author={Kim, Minkyu and Sung-Bin, Kim and Oh, Tae-Hyun},
  booktitle={ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
  pages={1--5},
  year={2023},
  organization={IEEE}
}